Christiane Reves, who lectures in German, helped to develop an AI program called Language Buddy that simulates human conversation. Credit: Meghan Finnerty, Arizona State University

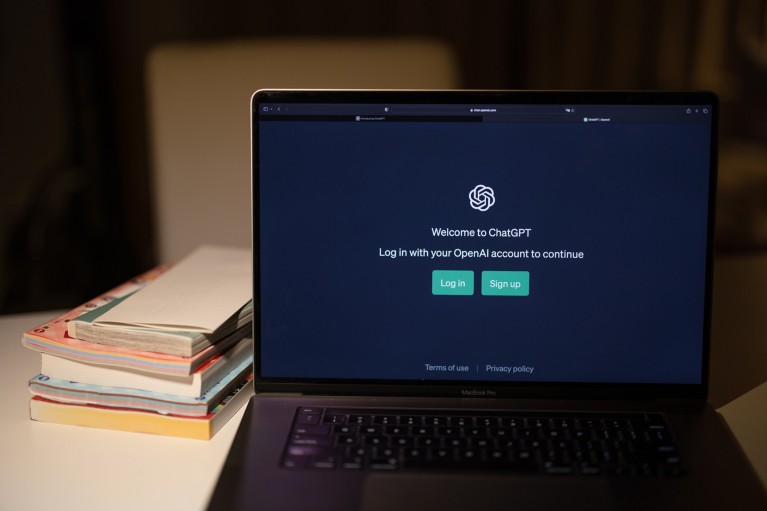

In the early days of generative artificial intelligence (AI), Ethan Mollick told his students to use it freely as long as they disclosed it. According to Mollick, a specialist in innovation and entrepreneurship at the Wharton School of the University of Pennsylvania in Philadelphia, “it worked great when ChatGPT-3.5 was the best model out there”. The program was good, but it did not replace students’ input: they still had to edit and tweak its responses to earn a high grade in Mollick’s course. Things changed with the release of ChatGPT-4, the latest version of the chatbot developed by the US tech firm OpenAI. The new AI was noticeably smarter, and it became much harder to distinguish its output from that of a person. Suddenly, the program was outperforming students in Mollick’s classes and he knew he had to rethink his approach.

Today, academia faces a dilemma as AI reshapes the world beyond the campus walls: embrace the technology or risk being left in the dust. However, as versions of the software become more robust, so does the risk of cheating. According to a 2023 survey, roughly 50% of students over the age of 18 have admitted to using ChatGPT for an at-home test or quiz or to write an essay (see go.nature.com/4fpwrtv).

Educators today have two options, says Mollick. The first is to treat AI as cheating and intensify conventional measures such as in-class writing assignments, essays and hands-on work to demonstrate students’ mastery of the material. “We solved this problem in math in the 1970s with calculators,” he says. “If cheating is the thing you are worried about, we can just double down on what’s always worked.” The second option, which he terms “transformation”, involves actively using AI as an educational tool.

Lesson logics

The transformative approach is gaining traction on campuses. Innovative educators in various fields, including computer science, literature, business and the arts, are now exploring how AI can enhance learning experiences and prepare students for a technology-driven future. One area in which AI tools have shown particular promise is languages.

“One of the main things you need to learn languages is communication,” says Christiane Reves, who teaches German at Arizona State University (ASU) in Tempe. “You need to talk to people and have actual interactions.”

In 2024, Reves decided to build an AI tool to meet this need. Through a collaboration between ASU and OpenAI, she developed an AI program called Language Buddy that simulates human conversation. Students interact with the AI just as they would with a real partner — using it to practise everyday interactions, such as ordering a meal at a restaurant, in spoken German.

If the conversation is too advanced, the student can adjust its complexity. Reves offers the tool for her beginner-level German course. She says it helps students to sidestep the natural anxiety that comes with trying to talk in a foreign language, which is a barrier to people getting the practice they need. Overall, she has been pleased with its performance. “It’s not robotic; it doesn’t just repeat sentences, and it creates a conversation. So you never really know what the next sentence is, which is the natural way,” she says.

The arrival of ChatGPT-4 has made it harder to separate the tool’s output from a real person’s. Credit: Stanislav Kogiku/SOPA Images/LightRocket/Getty

Suguru Ishizaki, an English specialist at Carnegie Mellon University in Pittsburgh, Pennsylvania, uses AI tools to teach new ways of writing. He has spent his career studying the challenges of becoming an effective writer and has now developed a tool to aid the process. Ishizaki says the problem is that inexperienced writers spend too long trying to craft nicely written sentences, which slows the process of getting their ideas onto the page. By contrast, expert writers and authors spend most of their time thinking, planning and preparing their ideas before hammering out prose on the keyboard. The “cognitive load of sentence craft” creates a hindrance to novice writers, which is where AI can help.

David Kaufer, a colleague of Ishizaki’s at Carnegie Mellon, agrees: “In the early writing process, you shouldn’t be worried about polishing. You should be worried about prototyping your ideas. What you need is a quick scribe to get these things down.”

In 2024, Ishizaki and Kaufer released myProse, an AI-powered tool that helps students to focus on their big ideas while AI handles the mechanics, transforming a student’s notes into grammatically correct, smoothly written sentences. They added ‘guardrails’ to ensure that the only information the tool uses to create prose comes from students’ notes, and not from its training data, which is taken from the Internet.

Kaufer says that he doesn’t want AI getting in the way of student creativity. AI should be “an attention tool that simply allows the student to attend to the most important things”, he says — namely, the ideas. By letting myProse finesse the sentence structure and produce drafts “efficiently and professionally”, students have more room to nurture their ideas.

He adds that the course is offered to upper-level undergraduates who already have an understanding of sentence crafting and grammar. It is not meant to be a replacement for learning how to write or practising creative writing, and it will be geared towards technical and professional writing. “Our goal is to preserve English departments and writing instruction,” says Kaufer. “AI cannot get in the way of that, but at the same time it is here to stay.”

Changing minds

Despite the exuberance among early adopters of AI, there is still much resistance among faculty members and students. Lecturers need to stay up to date with AI’s new capabilities and adopt it in an iterative way to encourage familiarity with the technology while maintaining the educational experience.

In his classroom, Mollick focuses on assigning projects to challenge students beyond the current capabilities of AI. In one example, he asked students to do things that AI struggles to do, such as building and testing a board game. He also asks students to reflect on how they’ve used ChatGPT, and what they’ve learnt from it, to gauge their thinking process. In another class, he created a tool that simulates job-interview scenarios for his students to get practice. He requires them to turn in the transcript and write a reflection on the exercise. He also assigns oral presentations with question-and-answer sessions designed to assess students’ knowledge and communication skills.

David Malan gave his AI tool ‘guardrails’ to guide students without giving away too much. Credit: Leroy Zhang/CS50

The challenge, according to Mollick, is that the technology advances and adds new capabilities rapidly, and students are adopting it quickly. Educators cannot simply use AI as an add-on to their courses, he says. Instead, they will have to overhaul their curriculum regularly to keep up with the technology. “We will have to do what we do terribly as academics, which is coordinate and move quickly in teaching,” he says. He urges teachers to share and collaborate with each other to maintain up-to-date teaching tools.

David Malan, a computer scientist at Harvard University in Cambridge, Massachusetts, recognizes AI’s immense potential to boost productivity, but thinks it is too powerful for the university setting. In his introductory-level coding course at Harvard, Malan prohibits the use of general AI tools until the end of the course. Instead, he developed his own tool, which offers a more nuanced approach to AI integration in education.

“We set out to make ChatGPT less useful for students, in the sense of providing them with a specific chat tool that still answers their questions but does not provide them with outright answers to problems, or solutions to homework assignments,” says Malan.

To achieve this balance, Malan and his team applied what he calls “pedagogical guardrails” to the AI, designing his tool to nudge students towards the correct solutions without actually providing them. The tool acts like a tutor that is available 24 hours a day, he says, offering help when students get stuck. It allows students to highlight one or more lines of code and get explanations of their function. It also disables autocorrect and autoformat, but it advises students on how to improve their coding style in the manner of a grammar-correction function, flagging text that needs improvement. His favourite feature is that the AI answers students’ questions any time of day, any day of the week.

Future visions

For Vishal Rana, a business-management specialist at the University of Doha for Science and Technology in Qatar, the biggest challenge is the cheating mindset. Students are wary of AI tools because of concerns about academic misconduct. But Rana stresses that once students enter the workforce, prompting AI is a skill that the job market requires. He thinks that using the technology does not constitute plagiarism, and that the final product should be credited to the person who does the prompting and knows how to ask the right questions. “As an employer, I would rather hire somebody who could ask the right questions and increase productivity in a short time span,” he says.

Vishal Rana says all students will need to know how to prompt AI once they join a workplace. Credit: Vishal Rana

Last year, Rana had a vision of transforming the business-education classroom from a conventional lecture-based environment to one in which students and lecturers worked alongside AI as partners in hands-on projects. Students were required to tap into generative AI programs, such as ChatGPT and Claude (from tech firm Anthropic in San Francisco, California), for many aspects of product development; these included brainstorming, e-mail writing and pitching to investors. The AI created ideas in seconds that allowed students to step into the role of editor, sifting through possible options and finding a feasible one. “Our goal was to create a more dynamic and interactive learning experience that prepares students for the AI-driven future of work,” he says.

In a product-development course, Rana instructed his students to put their real-world skills to the test and conduct in-person interviews with potential clients and stakeholders. The students used AI to write e-mails and generate interview questions, but they were the ones who had to go out and talk to people face to face, which bolstered their social skills. He said that in this case, the AI was working “as a co-pilot in this experiential learning”.

Rana suggests that educators will have to adapt their assignments for use with AI and experiential learning in future. “We suggest to all our academic colleagues [that they] now bring an element of experiential learning into their assessments,” he says. “This will replace old-school report writing and essay writing.”

Malan is optimistic that AI will eventually become a one-to-one teaching assistant — perhaps especially for students who aren’t financially able to go to university. “As much as we are focused on the on-campus students, for us, what’s been especially exciting about AI is the potential impact on self-taught students who aren’t as resourced or as fortunate to live in a part of the world where there are educational resources and institutions,” he says.

“There are quite a few students, young and old, around the world who don’t have a friend, a family member or a sibling who knows more about the subject than they do. But, with AI, they will now have a virtual subject-matter expert by their side,” Malan says.
